What are "Tokens"?

In the new age of artificial intelligence and its use in search, "tokens" could be considered the new basis for cost. Every conversational question you ask, and every response you receive, costs tokens.

We provide a number of definitions and examples below, but suffice it to say that the more tokens you use, the fewer queries/searches you can make. The fewer tokens you use, the more queries/searches you can make.
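To make that trade-off concrete, here is a minimal sketch that divides a token budget by the tokens each query consumes. The function name and all figures are hypothetical, chosen only for illustration; real allowances and per-query usage depend on the provider and on how long each prompt and answer are.

```python
def queries_affordable(token_budget: int, tokens_per_query: int) -> int:
    """Return how many whole queries fit in a token budget (hypothetical helper)."""
    if tokens_per_query <= 0:
        raise ValueError("tokens_per_query must be positive")
    # Integer division: a query that only partly fits in the budget does not count.
    return token_budget // tokens_per_query


# Hypothetical budget of 100,000 tokens.
budget = 100_000
# A concise query (prompt plus answer) versus a verbose one.
print(queries_affordable(budget, 500))    # 200
print(queries_affordable(budget, 2_000))  # 50
```

Cutting the tokens per query by a factor of four quadruples the number of queries the same budget covers.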

From the AI itself:

"Tokens are like credits that are used up each time you ask the AI a question. Each question costs a certain number of tokens based on its length and complexity.

You can think of it like using minutes on a cell phone plan - every call you make uses up some of your available minutes. With the AI, every question uses up some of your available tokens.

The more complex or lengthy your question is, the more tokens it will cost to generate an answer. Questions with more words or more research required will use up more tokens.

When you start running low on tokens, you may need to purchase more credits to keep asking the AI questions. Buying additional tokens allows you to replenish your supply.

The goal is to be as clear and concise as possible in your questions. This will minimize the tokens needed and allow you to get more use out of your token balance.

Being aware of how tokens work allows you to manage costs and budget the number of questions you can ask the AI system within your allotted tokens. Careful phrasing keeps the tokens required as low as possible."

And, from another AI:

"In the context of AI systems, a token is a unit of value that allows users to access and utilize the AI's capabilities. Here is a plain definition of what a token is:

A token represents a credit that can be exchanged in order to get the AI system to perform work and provide output. Tokens act as a form of currency within the AI platform.

When a user submits a request like a query or question to the AI, a certain number of tokens are deducted from their account balance as a "cost" for the AI generating that output.

The number of tokens charged depends on factors like the length of input, complexity of processing required, amount of output generated, and overall demand on the system. More demanding requests cost more tokens.

Users start with an initial supply of tokens and can purchase additional packages of tokens as needed to replenish their balances. Acquiring more tokens allows for more queries and use of the AI system.

The supply, cost, and usage of tokens is controlled and optimized by the AI platform provider. Users simply see their token balance deducted when making requests, prompting them to buy more when running low.

So in summary, a token is a proprietary unit of value within an AI platform, representing credits exchanged to get AI processing power and outputs. Tokens enable usage metering and pricing for AI capabilities."

From OpenAI's "What are tokens and how to count them?":

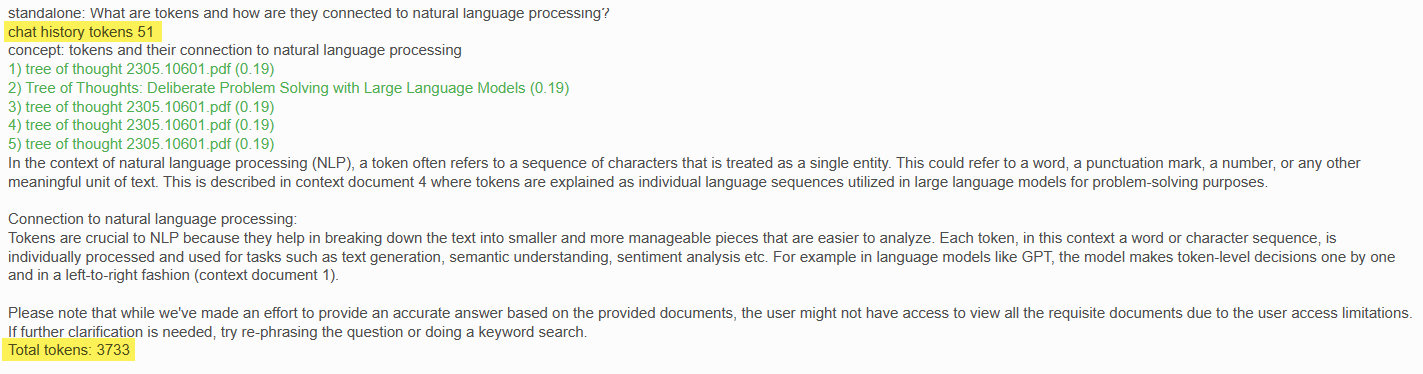

Tokens can be thought of as pieces of words. Before the API processes the prompts, the input is broken down into tokens. These tokens are not cut up exactly where the words start or end - tokens can include trailing spaces and even sub-words. Here are some helpful rules of thumb for understanding tokens in terms of lengths:

- 1 token ~= 4 chars in English
- 1 token ~= ¾ words
- 100 tokens ~= 75 words

Or

- 1-2 sentences ~= 30 tokens
- 1 paragraph ~= 100 tokens
- 1,500 words ~= 2048 tokens
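The length-based rules of thumb above can be turned into a quick estimator. This is a rough sketch assuming English text and splitting words on whitespace; the function names are illustrative, and the results only approximate what a real tokenizer would report.

```python
def estimate_tokens_from_chars(text: str) -> float:
    """Rough English estimate: 1 token ~= 4 characters."""
    return len(text) / 4


def estimate_tokens_from_words(text: str) -> float:
    """Rough English estimate: 1 token ~= 3/4 of a word, so tokens ~= words / 0.75."""
    return len(text.split()) / 0.75


quote = "You miss 100% of the shots you don't take"
print(estimate_tokens_from_chars(quote))  # 10.25 (41 characters / 4)
print(estimate_tokens_from_words(quote))  # 12.0  (9 words / 0.75)
```

Both estimates land near the 11 tokens OpenAI reports for this quote, which is about as close as a length-based heuristic can get; for exact counts, run the model's actual tokenizer.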

To get additional context on how tokens stack up, consider this:

- Wayne Gretzky’s quote "You miss 100% of the shots you don't take" contains 11 tokens.
- OpenAI’s charter contains 476 tokens.
- The transcript of the US Declaration of Independence contains 1,695 tokens.

How words are split into tokens is also language-dependent. For example ‘Cómo estás’ (‘How are you’ in Spanish) contains 5 tokens (for 10 chars). The higher token-to-char ratio can make it more expensive to implement the API for languages other than English.
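As a rough worked comparison, take the 4-characters-per-token rule of thumb for English and set it against the Spanish example:

$$
\underbrace{\frac{5\ \text{tokens}}{10\ \text{chars}} = 0.5\ \frac{\text{tokens}}{\text{char}}}_{\text{Spanish}}
\quad\text{vs.}\quad
\underbrace{\frac{1\ \text{token}}{4\ \text{chars}} = 0.25\ \frac{\text{tokens}}{\text{char}}}_{\text{English}}
\quad\Rightarrow\quad
\frac{0.5}{0.25} = 2
$$

For this phrase, that is roughly twice as many tokens per character, and so roughly twice the cost per character at the same per-token price. A single short phrase is a tiny sample, so treat the factor of two as illustrative only.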

To further explore tokenization, you can use our interactive Tokenizer tool, which allows you to calculate the number of tokens and see how text is broken into tokens. Alternatively, if you'd like to tokenize text programmatically, use Tiktoken as a fast BPE tokenizer specifically used for OpenAI models. Other such libraries you can explore as well include transformers package for Python or the gpt-3-encoder package for node.js.
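To give a feel for what a BPE (byte-pair encoding) tokenizer does, here is a toy sketch of the training idea: start from single characters and repeatedly merge the most frequent adjacent pair into a new symbol. This is an illustration only. It is not tiktoken's implementation, it works on characters rather than bytes, and the tiny corpus, merge count, and function names are made up.

```python
from __future__ import annotations

from collections import Counter


def most_frequent_pair(words: list[list[str]]) -> tuple[str, str] | None:
    """Count adjacent symbol pairs across all words and return the most common one."""
    pairs: Counter = Counter()
    for symbols in words:
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += 1
    if not pairs:
        return None
    # Counter.most_common orders equal counts by first appearance.
    return pairs.most_common(1)[0][0]


def merge_pair(symbols: list[str], pair: tuple[str, str]) -> list[str]:
    """Replace each non-overlapping occurrence of `pair` in one word with a merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged


def train_toy_bpe(corpus: list[str], num_merges: int):
    """Learn up to `num_merges` merges; return the merges and the final segmentation."""
    words = [list(w) for w in corpus]  # start from single characters
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:  # every word is already a single symbol
            break
        merges.append(pair)
        words = [merge_pair(w, pair) for w in words]
    return merges, words


merges, segmented = train_toy_bpe(["low", "lower", "lowest"], num_merges=2)
print(merges)     # [('l', 'o'), ('lo', 'w')]
print(segmented)  # [['low'], ['low', 'e', 'r'], ['low', 'e', 's', 't']]
```

After two merges the frequent stem "low" has become one token, while the rarer endings are still split into pieces. Real tokenizers learn tens of thousands of merges from very large corpora, which is why their tokens often line up with common words and word fragments rather than with whole words.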

How many words are in a token?

The number of words per token varies across AI systems and languages, as there is no universal standard. A token is a unit of text rather than a measure of processing effort; usage is metered by counting the tokens in what you send and what you receive. Here are a few key points:

- Tokens are not directly equal to a fixed word count. Common English words are often a single token, while rare words, numbers, and non-English text tend to be split into several sub-word tokens.

- Both the input (your prompt, plus any conversation history or documents sent along with it) and the generated output count toward usage. A short question can still use many tokens if it produces a long answer.

- For English text, the OpenAI rules of thumb above put 1 token at roughly ¾ of a word (about 4 characters), which works out to roughly 1.3 tokens per word. The actual ratio fluctuates with vocabulary and formatting.

- Anthropic's Claude models use their own tokenizer, so the same text can produce a somewhat different token count than it does on OpenAI models.

- Even within one provider, tokenizers change between model generations (OpenAI's GPT-4 and GPT-4o, for example, use different encodings), so a count for one model does not carry over exactly to another.

- Questions that pull in more material, such as retrieved documents, references, or long outputs, cost more tokens, because all of that text is counted regardless of how short the question itself is.

So in summary, tokens do not equate to a static number of words across AI systems. The ratio depends on the specific tokenizer and language, but for English a rough guideline is about ¾ of a word (about 4 characters) per token, or roughly 75 words per 100 tokens. Pricing is then applied per token, often at different rates for input and output.
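Because both the text you send and the text generated count toward usage, a rough cost estimate needs both token counts. The sketch below assumes prices quoted per million tokens, with separate input and output rates; the function name and the prices in the example are placeholders, not any provider's actual rates.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_million: float,
                  output_price_per_million: float) -> float:
    """Estimate one request's cost from token counts and per-million-token prices."""
    return (input_tokens * input_price_per_million
            + output_tokens * output_price_per_million) / 1_000_000


# Placeholder prices: 1.00 per million input tokens, 4.00 per million output tokens.
print(estimate_cost(1_000, 500, 1.00, 4.00))    # 0.003
# Same question, four times the answer length.
print(estimate_cost(1_000, 2_000, 1.00, 4.00))  # 0.009
```

With output priced higher than input in this hypothetical, the length of the answer dominates the cost, which is why asking for concise answers saves more than trimming the question.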