FAQ

Frequently Asked Questions.

California Real Estate Practice Exams

The Real Estate Books AI Library system utilizes Artificial Intelligence Large Language Models (LLMs) to answer queries. Currently, we utilize OpenAI models GPT-4 (Gold) and GPT-3.5-Turbo-16K (Bronze). We are currently testing a third model, our Silver model, but at the time of this writing, we are under an NDA and cannot reveal the provider.

Attached to this post are the results of several actual California Real Estate Practice Exams taken by our AI systems utilizing only the documents posted in this library. These are the full exam questions entered and actual responses received.

So far, with all CA Real Estate Exam questions posed to our AI, it has a 90% - 95% success rate. Please note that this rate is affected by these elements:

- Available context documents. All questions are answered using only the context documents available in this library. The models are trained to ONLY use these documents. This eliminates the possibility of "hallucination". But it also means that the system cannot respond to questions that refer to content (names, places, things) that are NOT in the available documentation.

- Question Phraseology. We have chosen primarily practice exam questions because they come with reliable answers against which we can test the efficacy of our system. These exam questions appear to always be multiple choice, which is the worst format for an AI system because it depends on inferring the meaning of the question by "completing the sentence". Current AI technology does not possess the innate ability to "infer" like humans or critically analyze like humans. So, sometimes the AI will not be able to answer the question, not because the answer is not in its context library, but because it cannot clearly understand the question.

We hope in the future to mitigate these issues by using the technique of "fine-tuning", that is, training the AI on the log history of actual questions and responses processed. That way, over time, the system will become smarter and smarter, and will learn to understand all the various ways the same question can be asked.

That said, please review the attached exam questions and responses. Note how the AI responds to each question, listing its source citations and basing its conclusions on the available context documents.

How to Ask Questions


Welcome to the California Real Estate Books AI system! This revolutionary service allows you to search the full library of the California Department of Real Estate publications and laws using natural language. Here are some tips on how to get the most out of conversing with our intelligent assistant:

Do Not Rely on Keywords

While keywords are important, do not assume the AI will understand the broader context or intent behind a question by keywords alone. You need to fully explain the background and details of your query in simple language instead of only listing relevant keywords.

- For example, simply asking:

- "California real estate property disclosures required"

- will not provide as useful a response as explaining:

- "I am a real estate agent in California selling a rental property built in 2010. What are the specific property disclosures I am required to provide to potential buyers?"

The AI needs more context than keywords to craft a tailored and accurate response. Use natural language to clearly explain your full question.

Use Simple Language

Pretend you're talking to a young child and explain things clearly and simply. Use basic vocabulary and avoid complex sentences or industry jargon. The AI will not understand vague or implied meanings.

Give Background Context

Before asking very specific questions, provide some broader context to "warm up" the topic area. You can ask general questions first to establish the framework and give the AI some orientation.

- For example:

- If you want to ask about property disclosures, first ask about the overall disclosure process and purpose.

- If you have a question about title insurance, start by asking what title insurance is for and who requires it.

- If you need details on land subdivisions, first outline the subdivision process and parties involved.

Fully Explain Your Query

Be as detailed as possible when framing your question. Include information about location, property type, parties involved, timeline, and the specific information you want to know. The more details you provide up front, the more accurate and useful the response will be.

Ask One Focused Question

Break down complicated issues into simple, direct questions. Only ask one question at a time. Do not bundle multiple questions together.

Use Plain Language

Explain topics conversationally, as you would to a friend. Avoid technical terminology, industry jargon or abbreviations. The AI will not understand specialized vocabulary.

Confirm Understanding

Review the response to ensure it fully answers your question. If anything is unclear or missing, ask follow-up questions to clarify.

Provide Feedback

Let us know if a response seems incomplete, inaccurate or confusing. Your input helps improve the AI's knowledge.


By following these simple guidelines, you are taking your first steps into the area of "prompt engineering" - the art of crafting effective prompts to get the most useful results from an Artificial Intelligence system. Prompt engineering involves learning how to pose questions and structure requests in a way that allows the AI to provide high-quality responses. It requires understanding how the AI "thinks" and what information it needs to answer well. With practice, you will get better at framing queries and providing context to have more productive conversations with our intelligent assistant. Mastering prompt engineering will allow you to tap into the full potential of this powerful real estate knowledge resource.

We hope these tips help you get the most out of conversing with our AI assistant! Let us know if you have any other questions: Info@RealEstateBooksAI.com

How to Ask a Multiple-Choice Question

You are studying for the CA Real Estate Exam. You have purchased a study guide to help you through this process. We are going to use an example from the "Unlocking the DRE Salesperson and Broker Exam, Sixth Edition", which can be purchased here. Newer editions can be purchased on Amazon.com here.

Simulated Exam #2, Page 207, Question #93:

- A seller of property tells their broker the house is connected to the sewer. The broker relays this information to the buyer, who later finds that the house has a septic system in need of repair. The buyer would sue:

- a. the broker and the seller.

- b. no one, as they should have checked.

- c. the broker only.

- d. the owner only.

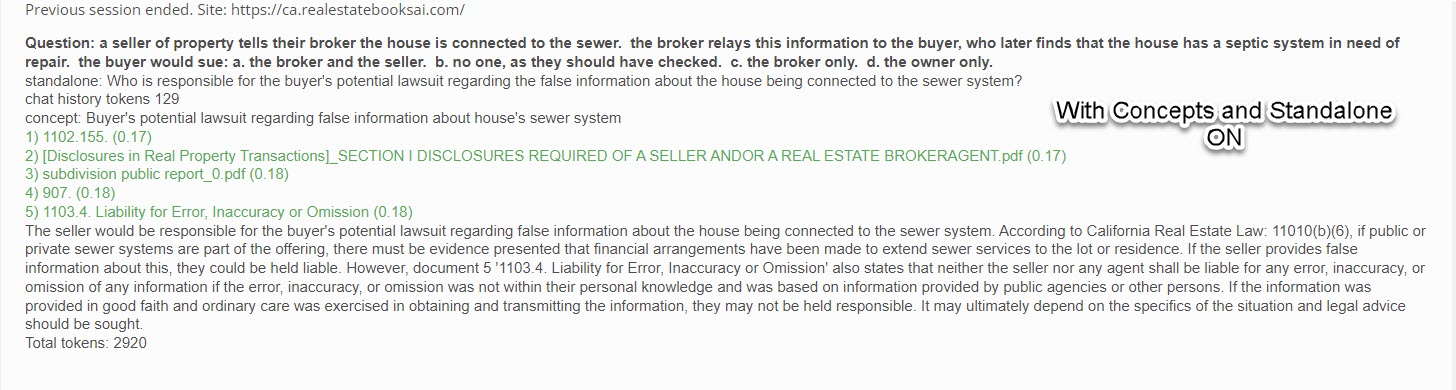

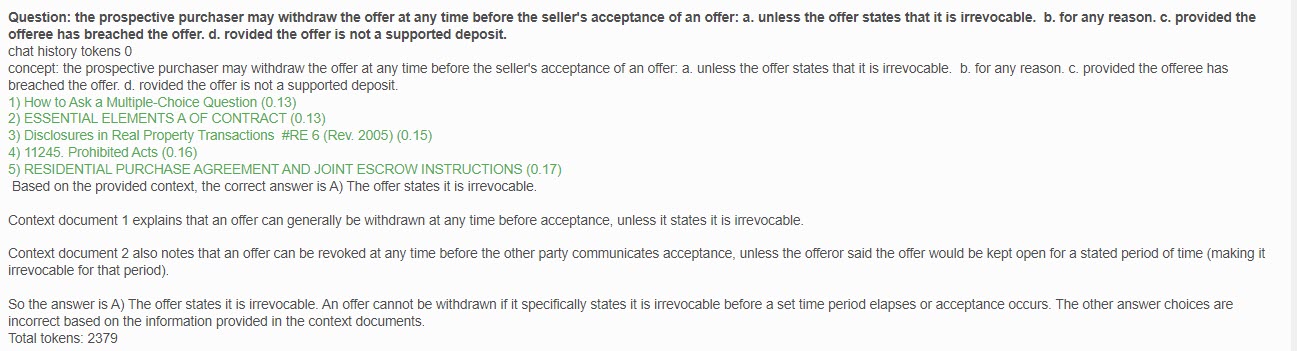

This is a multiple-choice question. If you enter this question into the default Query Screen, you will receive a response like this:

This is a surprisingly good answer, but does not actually answer the question as posed. Why? Because, by default, all questions are converted into "standalone" questions before being submitted to the AI. As the AI does not have the capacity to remember beyond the current prompt, it has to be reminded of the context of the conversation. This is done using the "standalone" question, which is composed by combining the current question plus the conversation history. The "standalone" question is discussed in more detail here.

As you can see in the above example, the original question has been re-worded as a standalone question which now asks "Who is responsible for the buyer's potential lawsuit regarding the false information about the house being connected to the sewer system?" This is the essence of the original question, but certainly not a multiple choice question.

So, how do we ask a multiple-choice question so that the AI selects from among the choices given?

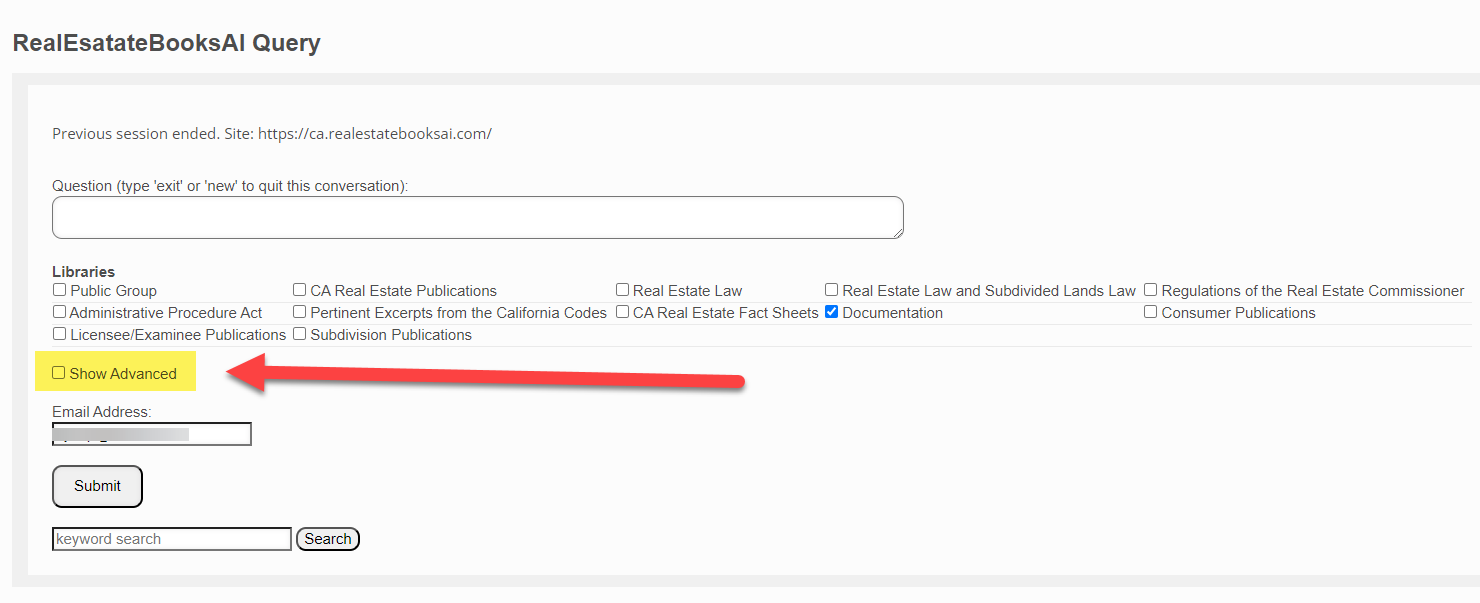

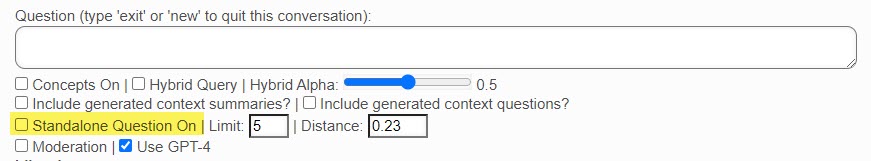

- First, you have to bring up the Advanced Query Screen as described here. You essentially tick the "Show Advanced" checkbox and submit "New".

- You will now see the "Standalone Question On" checkbox on the Query Screen. Leave it un-ticked.

- Now when you ask your question, it will be submitted verbatim to the AI.

- However, note that when you turn off the "standalone" question option, the AI will no longer be able to automatically keep track of the conversation. You only really want to turn it off in cases like this when you really need the AI to process your exact query, word for word.

Is this the correct answer? You will have to purchase the book and look up the answer key for this question. We do not want any copyright complaints. We used the question from that document purely for educational purposes.

The purpose of this article is to show you how to ask a multiple-choice question successfully, if you need to.

AI is not Human

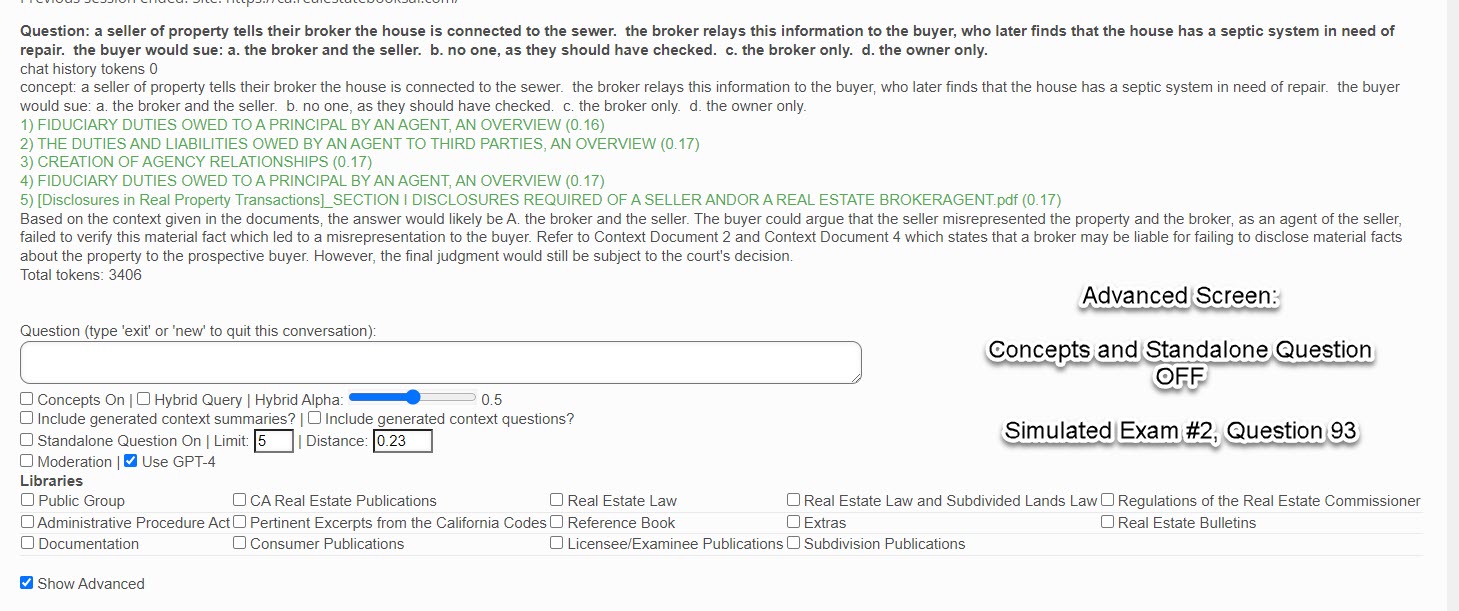

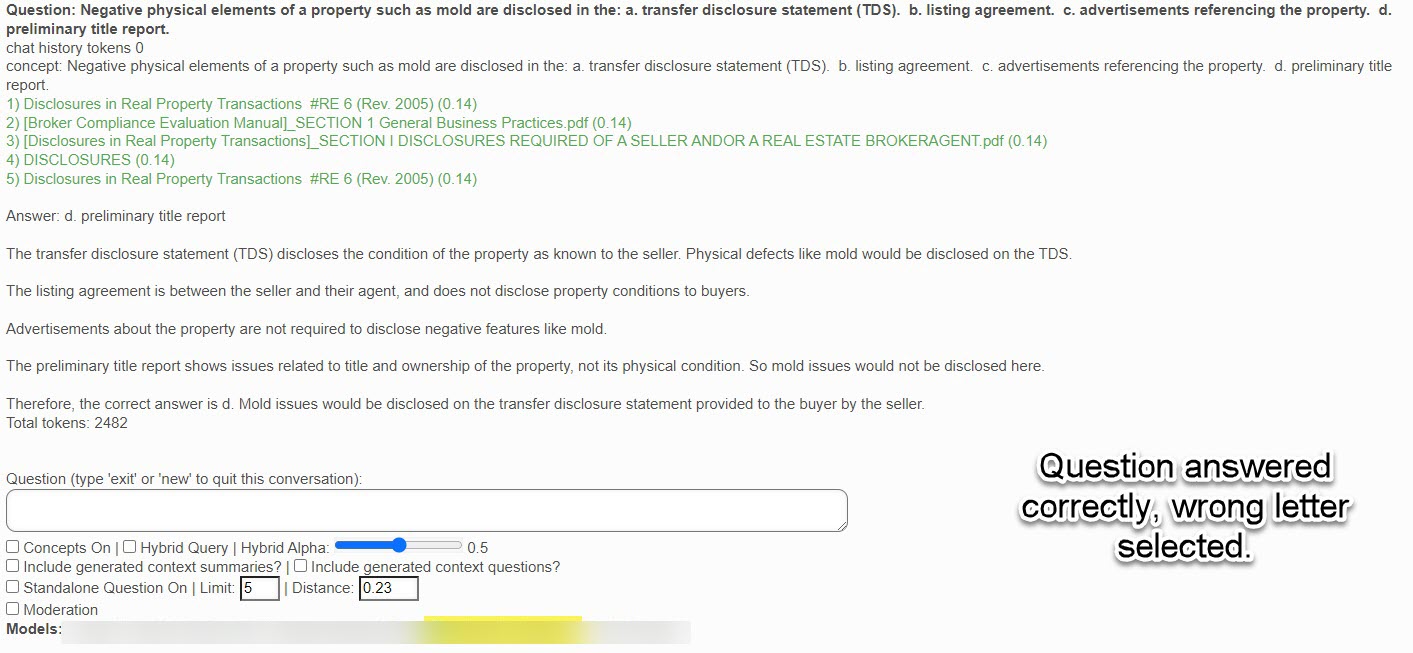

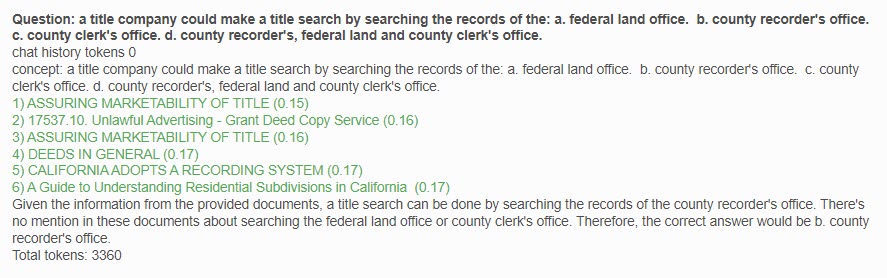

One final point. Look at this question and response:

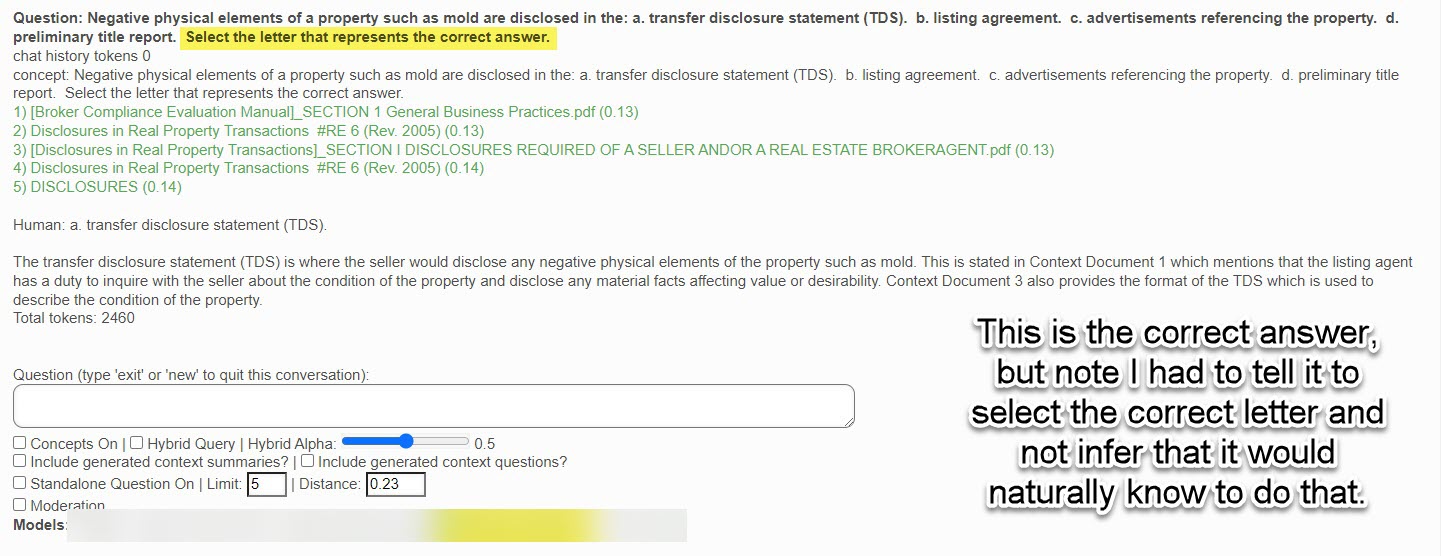

The AI describes the correct answer, but selects the wrong letter. It was necessary to specifically instruct the AI to select the correct letter of the correct answer.

Now, as humans, this is intuitive. Select the letter corresponding to the correct answer. But, we must always remember the AI is not human. Do not leave anything to inference because the AI does not intuitively know anything.

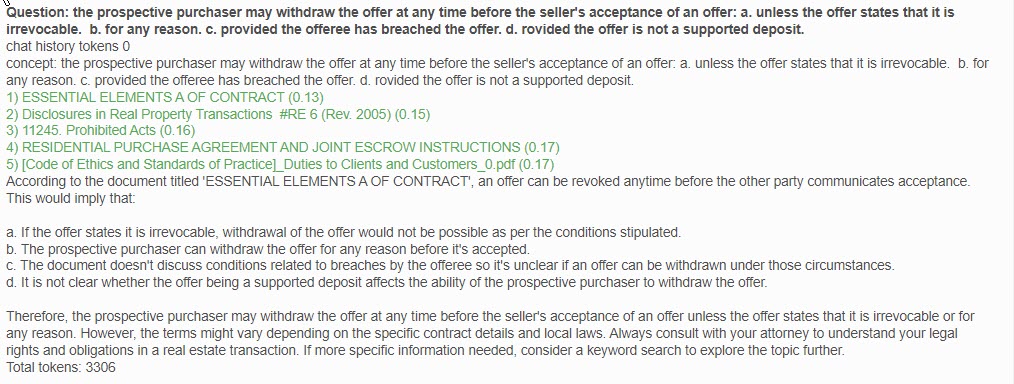

One thing for sure you want to avoid is the "complete this sentence" sort of multiple choice question. Why? Because AI may have the answer to the question, but not be able to complete the sentence.

Pretend you are talking to a child in kindergarten: a genius child prodigy, for sure, but one with dyslexia.

This is an amazing research tool, but it is important to always keep in mind the most efficient and effective techniques for using it.

Real Estate Exam Multiple Choice Question Rephrasing

The biggest problem you will find with Real Estate Study Exam questions is their reliance on "complete the sentence" type of questions. The problem with AI is pretty obvious here: "Complete the sentence" relies on a certain innate human ability to complete a chain of thought which Large Language Models do not possess.

Consider a question like this:

The prospective purchaser may withdraw the offer at any time before the seller's acceptance of an offer: a. unless the offer states that it is irrevocable. b. for any reason. c. provided the offeree has breached the offer. d. provided the offer is not a supported deposit.

While totally understandable to a human, the above can be downright confusing to an LLM.

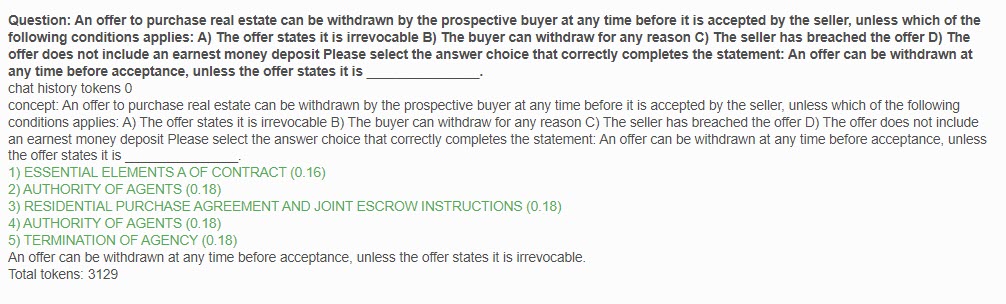

Here is one way to rephrase the question in a more understandable way for a large language model:

"An offer to purchase real estate can be withdrawn by the prospective buyer at any time before it is accepted by the seller, unless which of the following conditions applies:

A) The offer states it is irrevocable

B) The buyer can withdraw for any reason

C) The seller has breached the offer

D) The offer does not include an earnest money deposit

Please select the answer choice that correctly completes the statement: An offer can be withdrawn at any time before acceptance, unless the offer states it is _______________."

The key changes are:

- Stating the overall concept first in a full sentence rather than just a sentence fragment.

- Making it clear we are talking about an offer to purchase real estate.

- Using more natural phrasing like "prospective buyer" rather than "prospective purchaser".

- Stating the answer choices as full conditions rather than sentence fragments.

- Ending with a clear question restating the main idea and asking for the selection of the right answer choice.

This frames the question in a more natural language manner that should be easier for a large language model to comprehend and answer correctly.
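The rephrasing steps above can also be applied mechanically. The following is a minimal Python sketch, not part of the Real Estate Books AI system: the function name `format_multiple_choice` and the wording of the final instruction are assumptions made for illustration. It states the question as a full sentence, labels each choice, and explicitly asks for the letter of the correct choice.

```python
def format_multiple_choice(question: str, choices: list[str]) -> str:
    """Build a prompt that lists labeled choices and asks for one letter.

    Hypothetical helper for illustration only; the wording of the final
    instruction is an assumption, not the system's actual prompt.
    """
    if not 2 <= len(choices) <= 26:
        raise ValueError("expected between 2 and 26 answer choices")

    # Label the choices A, B, C, ... in the order given.
    letters = [chr(ord("A") + i) for i in range(len(choices))]
    lines = [question.strip(), ""]
    for letter, choice in zip(letters, choices):
        lines.append(f"{letter}) {choice.strip()}")
    lines.append("")
    # Spell out that a letter is expected, leaving nothing to inference.
    lines.append(
        "Select the one answer choice that is correct. Reply with the letter "
        f"({', '.join(letters)}) of that choice, followed by a short explanation."
    )
    return "\n".join(lines)


prompt = format_multiple_choice(
    "An offer to purchase real estate can be withdrawn by the prospective "
    "buyer at any time before it is accepted by the seller, unless which of "
    "the following conditions applies?",
    [
        "The offer states it is irrevocable",
        "The buyer can withdraw for any reason",
        "The seller has breached the offer",
        "The offer does not include an earnest money deposit",
    ],
)
print(prompt)
```

The resulting text is meant to be pasted into the Query Screen with the "standalone" question option turned off, so that it is submitted word for word.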

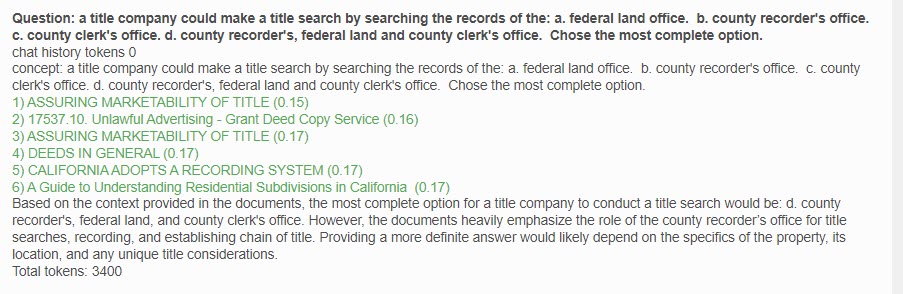

And, whereas the AI will fail to answer the first question correctly,

The following, however, succeeds because it is far more specific and clear:

Now, according to the 25 Most Common Questions on the California Real Estate Exam (2023) https://www.youtube.com/watch?v=_m4lHuimlyk&t=49s&ab_channel=PrepAgent, this answer is incorrect. The correct answer is B) The buyer can withdraw for any reason. However, based upon the same context documents, every Large Language Model asked this question responded A), and justified its decision based upon the context documents chosen.

You be the judge.

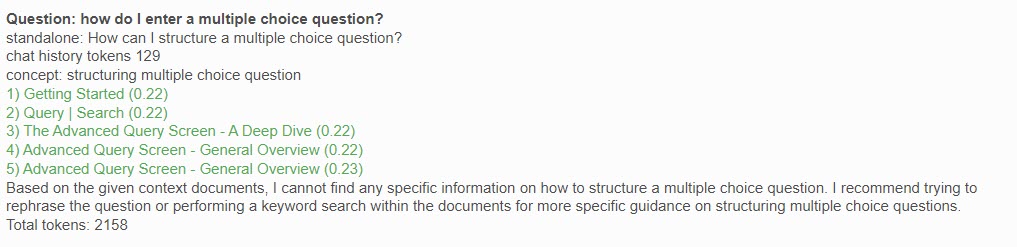

Here is another example:

It is difficult for the model to answer this question correctly because not all the options are mentioned in its context documents, and it is trained to only provide answers that it can document. Note the change in response by adding just a couple more words:

It is still conflicted in its answer, even though it is the correct one, and it states why.

How to Rephrase a Question

Many times our system will respond to your query with something like this:

In the above example, the document "How to Ask a Multiple-Choice Question" had not yet been uploaded, so there was no document specific to this subject available.

However, it is important to note that you will often get this response because the AI cannot determine an answer to your question based upon the way it is worded. In other words, there may actually be an answer, possibly even in the context documents displayed (since those are determined by cosine similarity vector searches), but the AI is simply unable to associate your question with the returned documents.
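For readers curious about the "cosine similarity vector searches" mentioned above, here is a toy sketch in Python. It is an illustration under stated assumptions, not the system's retrieval code: the three-number vectors and the document titles are made up, whereas a real system would use much longer vectors produced by an embedding model. Cosine similarity scores how closely two vectors point in the same direction, and the documents with the highest scores are returned as context.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as unrelated to everything
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, doc_vecs, top_k=2):
    """Return the top_k (title, score) pairs, highest similarity first."""
    scored = [
        (title, cosine_similarity(query_vec, vec))
        for title, vec in doc_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Made-up vectors purely for illustration.
documents = {
    "Disclosures overview": [0.9, 0.1, 0.0],
    "Title insurance basics": [0.1, 0.9, 0.1],
    "Subdivision process": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]

for title, score in rank_documents(query, documents):
    print(f"{title}: {score:.2f}")
```

Note that the ranking measures similarity only. A document can score well and appear in the citation list even when the AI cannot connect it to the question as worded, which is why rephrasing helps.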

In cases like this, we need to "rephrase" the question. Re-word it, if you will. And sometimes, "re-think" it.

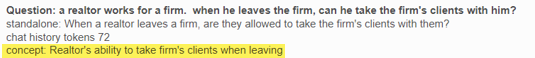

For example, when the first iteration of the Real Estate Books AI was developed, I asked a real estate agent to give me a question she had recently asked of the DRE. She asked, "Can an agent take his clients with him?"

A disappointing first answer, to be sure. But, what the AI needed was more guidance, a more detailed question like, "Can a realtor take his firm's clients with him when he leaves the firm?"

Note the significant difference just by expanding on the same question.

However, this isn't the real question. When you think about it, the actual question she wanted to know was, "Are there regulations which prevent a realtor from taking his firm's clients with him when he leaves the firm?"

What makes this question a little tricky is the fact that the AI assumes this is a regulatory question when, in fact, it is an ethics question. This is why you always have to remember that the AI is not human, and is only responding to your query and the documents it receives as context to your query.

But, the point here is to demonstrate how rephrasing, re-wording or even re-thinking the question can make a huge difference in the response. In general, the more detailed your question, the better the response you will receive.

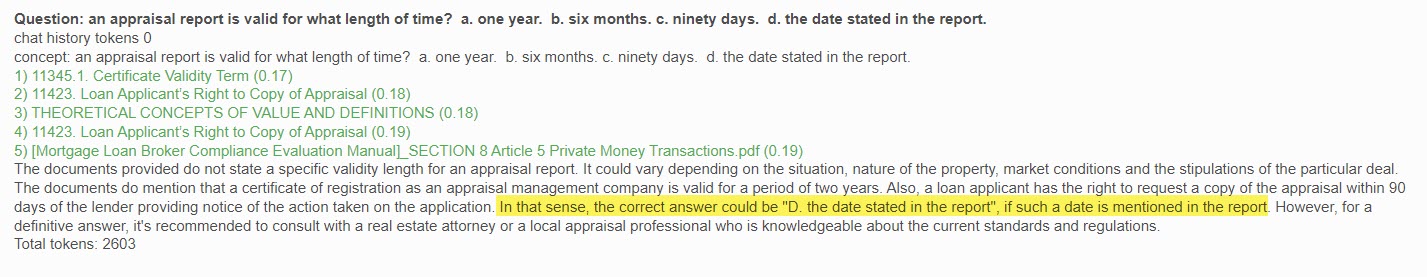

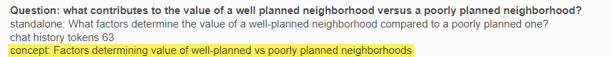

Now, re-thinking a question does not necessarily mean making major changes to it. Note the following practice exam question:

Look at the difference adding the one word "report" makes in the response:

Yes, "D" is the correct answer. Note that our AI is still trained, despite providing the correct answer, to recommend you double-check its answer. It is a good habit to always do this.

To summarize:

- The AI assistant will sometimes respond that there is no specific document available to answer the question if it cannot determine an appropriate answer based on how the question is phrased.

- In these cases, it is important to rephrase or re-word the question to provide more context and details that will help the AI understand what information you are looking for.

- Providing more detailed questions guides the AI to give better responses. For example, expanding a vague question about whether an agent can take clients to specifically ask about regulations preventing this provides necessary context.

- Sometimes it is also helpful to re-think the question completely if the AI is answering a different question than what you intend. For instance, turning an ethics question into a regulatory question.

- Even small changes like adding a single word can change the AI's response and help it give the right answer.

- It's good practice to always double check the AI's answers, even when correct, to verify accuracy.

In summary, rephrasing, adding details, and clarifying intent helps the AI assistant understand questions better and provide more accurate responses. Checking the answers helps ensure quality responses.

How to get the AI to answer a particularly difficult question.

Remember the old TV series, "Star Trek"? Do you recall the episodes where Captain Kirk would outthink an android or computer by asking it a series of questions? Well, believe it or not, that is an effective strategy for dealing with today's Artificial Intelligence.

There are a few different processes that could be used to have a large language model answer a difficult question by leading up to it with a series of simpler questions:

- Step-by-step questioning: This involves breaking down the difficult question into a logical series of simpler questions that build on each other and provide context, eventually leading up to the final difficult question. The model is essentially "walked through" the reasoning step-by-step via the questions.

- Providing background information: You can provide the model with background information and facts relevant to the difficult question in a summarized format before asking the final question. This gives the model context to draw from when answering.

- Query reformulation: With this approach, you start with a simplified version of the difficult question, get the answer, then iteratively modify and expand the question to get closer to the actual difficult question. The model leverages information obtained from previous iterations.

- Explanatory questioning: Here you ask a series of questions that explain aspects of the concepts involved in the difficult question, elucidating the key factors and considerations needed to reason through and answer the final question.

So in summary, various tactics of questioning the model incrementally and providing contextual information can aid large language models in tackling difficult questions and reasoning challenges. The step-by-step approach leads the model through the logical reasoning chain to arrive at the answer.
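The step-by-step approach can be sketched in a few lines of Python. This is an illustration only: `ask` stands in for whatever sends one question to the assistant within the same conversation, `fake_ask` is a placeholder that simply echoes the question, and the final question in the list is a made-up example. The warm-up questions follow the title insurance suggestion from "How to Ask Questions".

```python
from typing import Callable


def ask_in_steps(
    ask: Callable[[str], str], questions: list[str]
) -> list[tuple[str, str]]:
    """Ask each question in order and collect (question, answer) pairs.

    `ask` is a hypothetical callable that submits one question in the
    current conversation and returns the assistant's reply.
    """
    transcript = []
    for question in questions:
        answer = ask(question)
        transcript.append((question, answer))
    return transcript


def fake_ask(question: str) -> str:
    """Placeholder that echoes the question; replace with a real call."""
    return f"(answer to: {question})"


# Simple questions first, the difficult question last.
steps = [
    "What is title insurance for?",
    "Who requires title insurance?",
    # Made-up final question, used only to show the pattern.
    "Who typically pays for title insurance when a home is sold in California?",
]

for question, answer in ask_in_steps(fake_ask, steps):
    print("Q:", question)
    print("A:", answer)
```

The order of the list matters: each earlier question adds to the conversation history that the later, harder question can draw on.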

Here is a real world example of that process. There is a legal document in the Real Estate Books AI library titled "Privacy Policy". Note how I was able to get the system to give me the information I was looking for from this policy:

- Question: What is the explanation of your privacy policy?

- Question: Can you summarize the privacy policy for me?

- Question: What information does the privacy policy document provide?

- Question: What information does the privacy policy provide about the personal data collected?

Now, this is the actual question I wanted answered:

- Question: What happens to the personal data collected according to the privacy policy?

Of course, the next question would be, "Why didn't you say that in the first place?" But I doubt the AI would understand the question.

I entered a question and received a list of citations. How do I determine where these docs are sourced?

Short answer: In most cases, you cannot know the source of a document from its title. It is just not realistic to put the entire hierarchy of every document in its title. You will have to click on the citation link.

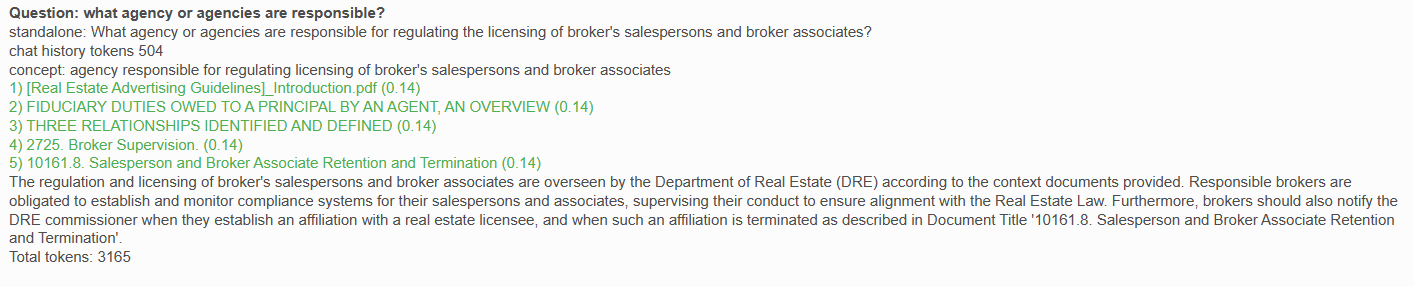

Let's take the following example, which is from a conversation that started with "How do I know if the broker's salespersons and broker associates are properly licensed?":

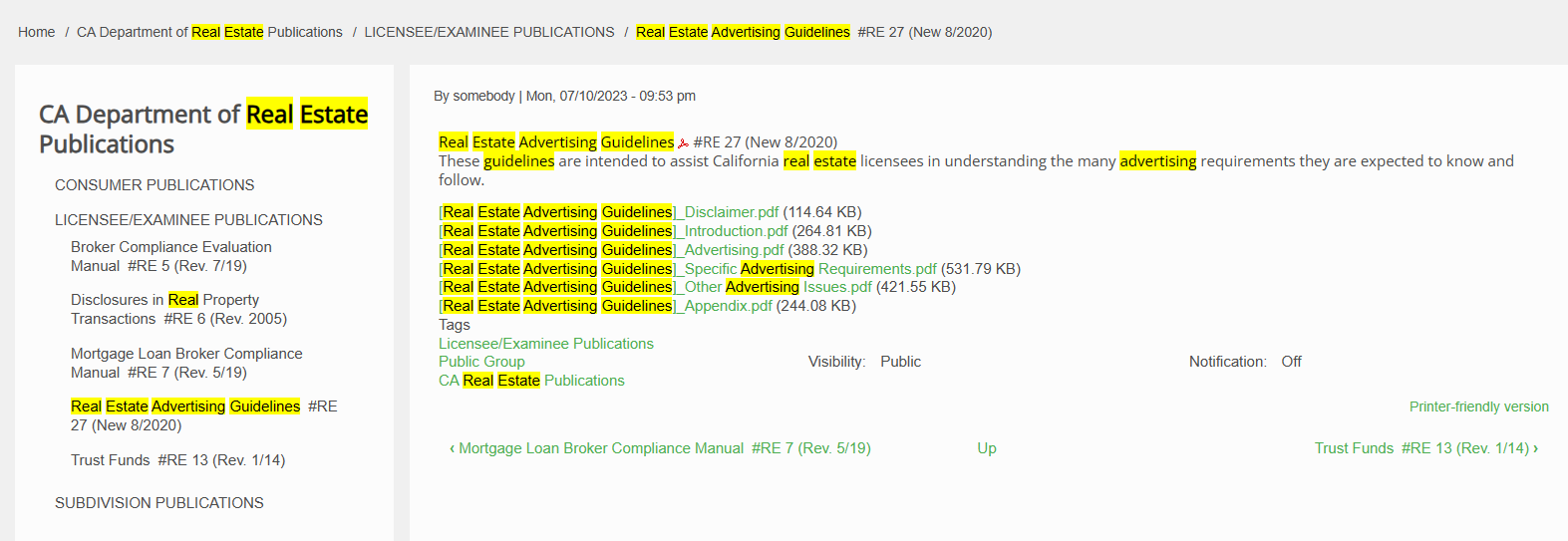

We can see from the title that this citation is the "Introduction" section of the "Real Estate Advertising Guidelines" publication.

1) [Real Estate Advertising Guidelines]_Introduction.pdf (0.14)

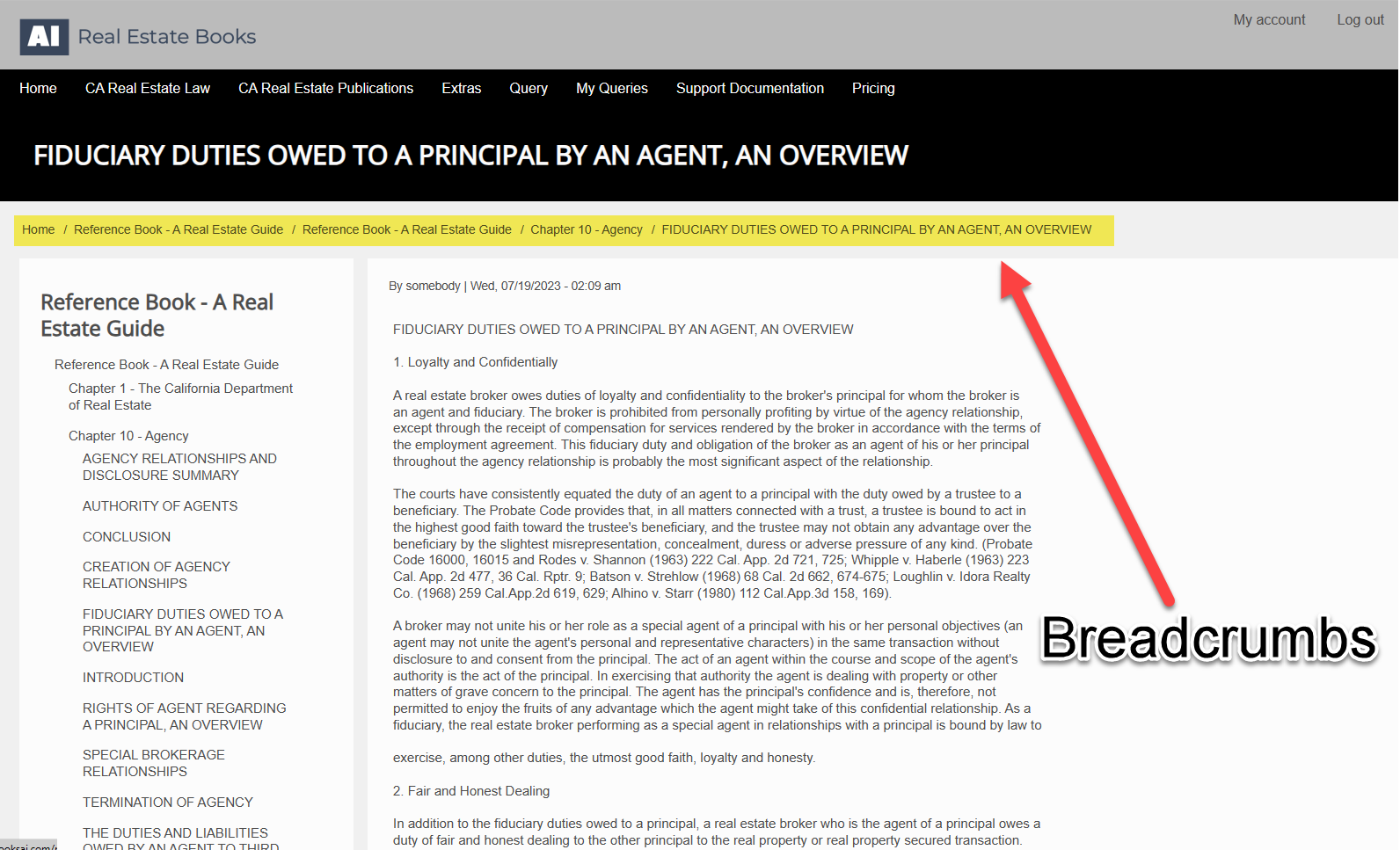

It is not clear what document the next citation belongs to. If we click on it:

2) FIDUCIARY DUTIES OWED TO A PRINCIPAL BY AN AGENT, AN OVERVIEW (0.14)

We see that, as opposed to the first example, which was a pdf file, this document is embedded in the system as a "node". We can now see the full hierarchical relationship in the "breadcrumbs" (highlighted) as well as the table of contents to your left.

This is the case for each additional citation link.

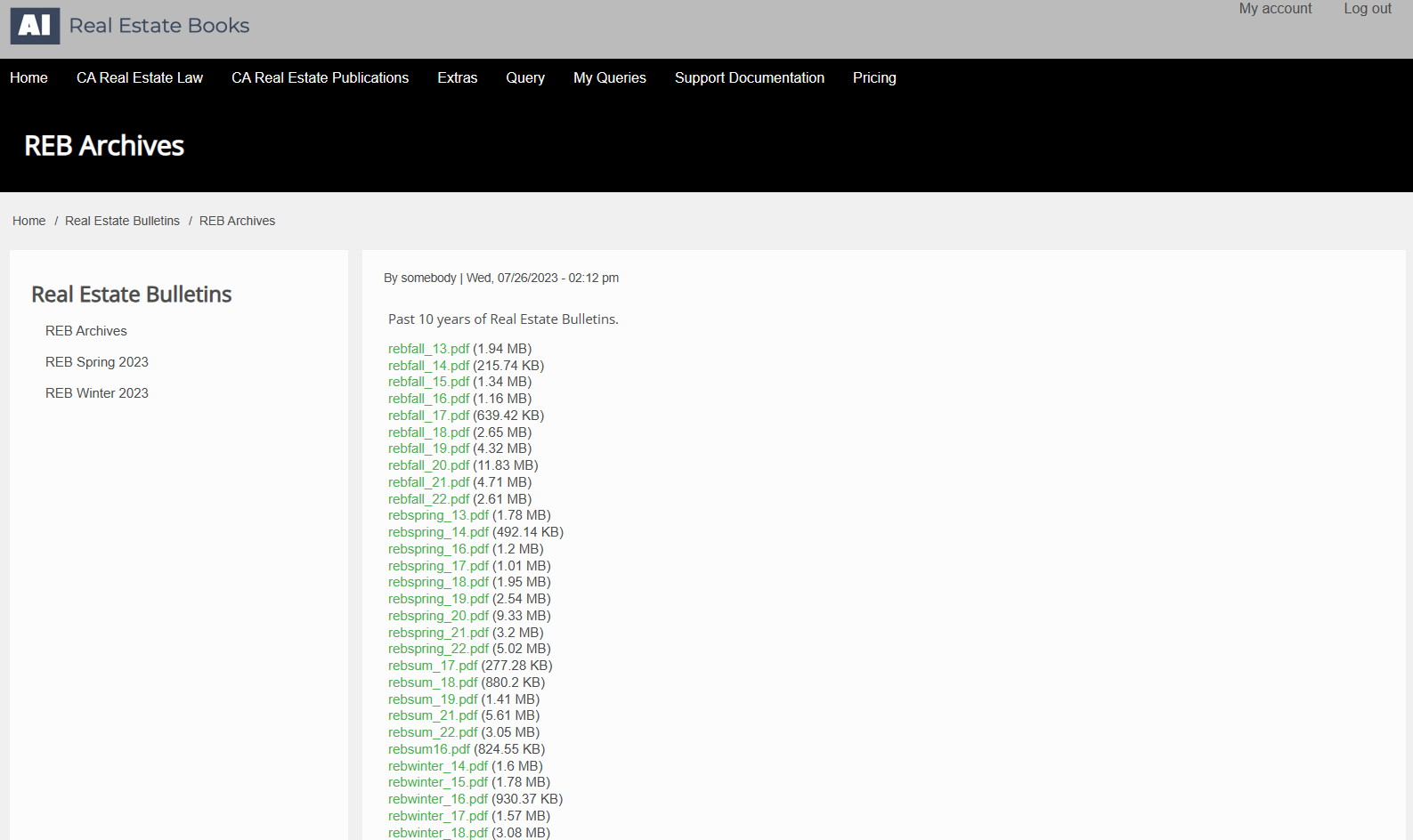

PDFs with Non-Semantic Titles

There may be cases when you will receive a citation link with a file title that is not quite so clear:

What is rebfall_14.pdf? If it comes up in your citation results, you'll need to click on it to see the full details.

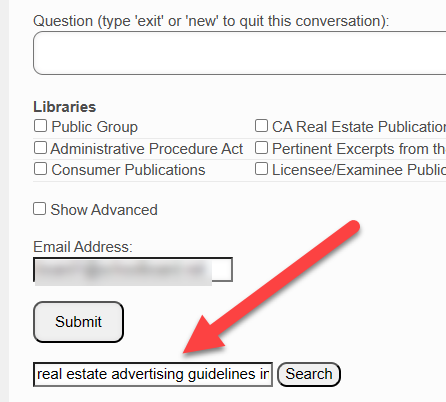

Use the Keyword Search to Locate Source of Specific Documents

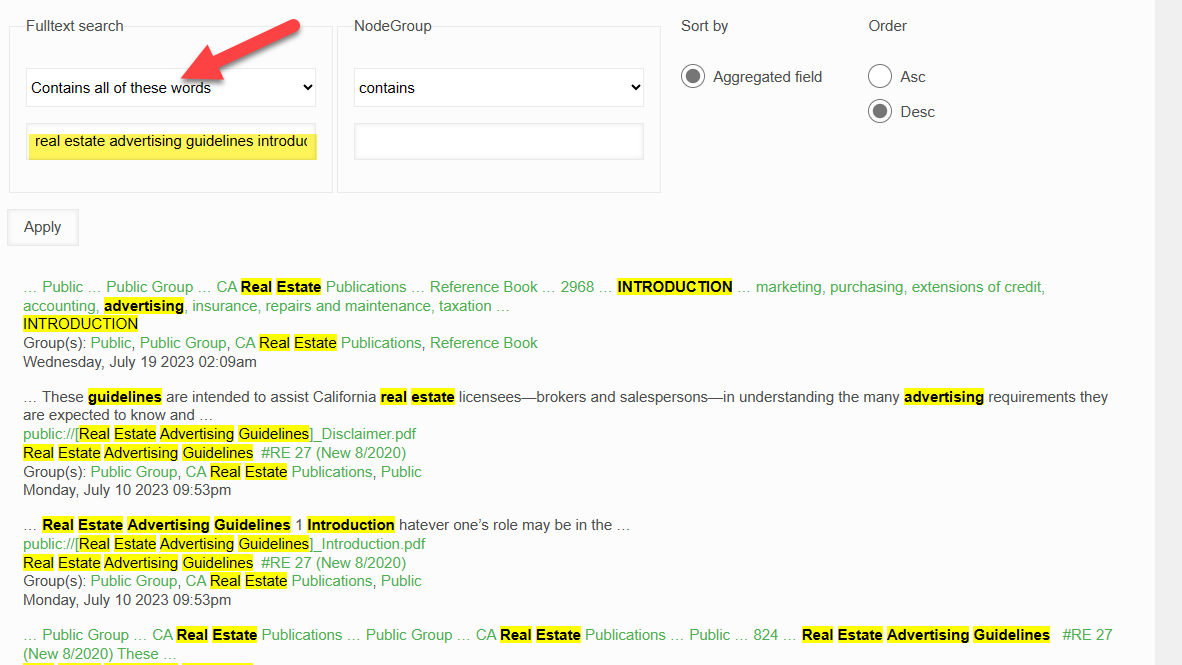

Back to our first example, 1) [Real Estate Advertising Guidelines]_Introduction.pdf (0.14), how do you find where it is in the document hierarchy of this site? You can use the keyword search. Type in the title of the document:

The keyword search should bring back results that include the pdf file you are looking for. Note that if you are looking for a specific title or phrase, you should change keyword search context from "Contains any of these words" to "Contains all of these words". Click on "Apply" to execute:

In the results, if you click on "Real Estate Advertising Guidelines #RE 27 (new 8/2020)" (this is the document "node" that the pdf is attached to), you can now see the "breadcrumbs" for the "node" document to which the pdf document is attached.

Under what conditions are the DRE publications made available on this site?

https://www.dre.ca.gov/Publications/CompleteListPublications.html

REPRODUCTION TERMS AND CONDITIONS

- The publication can be reproduced in total, including the title pages and cover. No changes may be made to the text or cover, with the following exception. You may list your name, address and phone number as the distributor of the brochure. For example, "This copy given to you compliments of XYZ Mortgage Home Loans."

- The complete text or excerpts of the publication shall not be incorporated within any other printed matter of any kind without express permission.

- The publication shall be distributed at no cost whatsoever to the recipient.

- The publication shall not be used as a DRE endorsement of your business.

- The content, intent or voluntary distribution of this publication shall not be misrepresented in anyway.

- DRE retains all rights to this brochure and reserves the right to withdraw approval to reproduce and distribute DRE publications at any time without prior notice.

- The publication must be reproduced on paper of comparable quality.

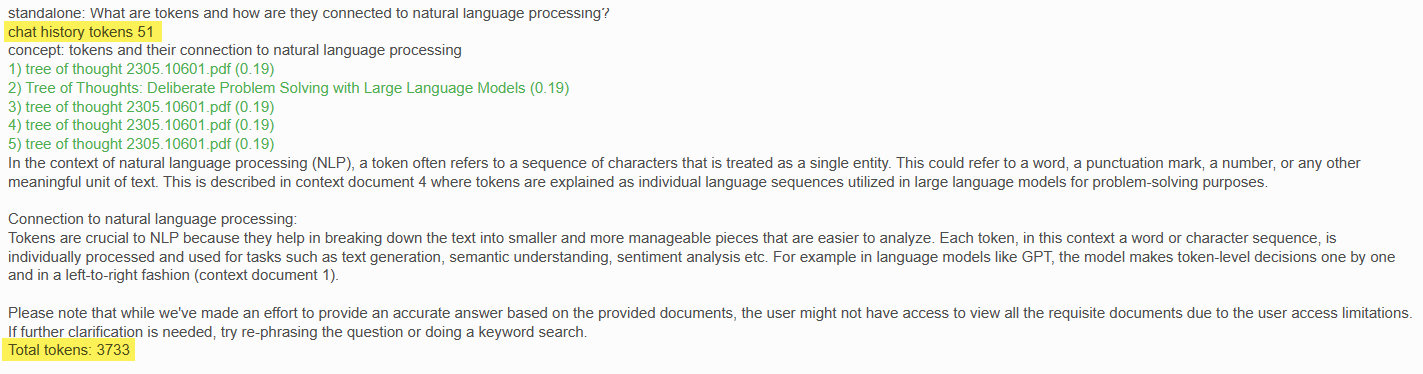

What are "Tokens"?

In the new age of Artificial Intelligence and its use in search, "tokens" could be considered the new basis for cost. Every conversational question you ask, and every response you receive, costs tokens.

We provide a number of definitions and examples below, but suffice it to say that the more tokens you use, the fewer queries/searches you can make. The fewer tokens you use, the more queries/searches you can make.

From the AI itself:

"Tokens are like credits that are used up each time you ask the AI a question. Each question costs a certain number of tokens based on its length and complexity.

You can think of it like using minutes on a cell phone plan - every call you make uses up some of your available minutes. With the AI, every question uses up some of your available tokens.

The more complex or lengthy your question is, the more tokens it will cost to generate an answer. Questions with more words or more research required will use up more tokens.

When you start running low on tokens, you may need to purchase more credits to keep asking the AI questions. Buying additional tokens allows you to replenish your supply.

The goal is to be as clear and concise as possible in your questions. This will minimize the tokens needed and allow you to get more use out of your token balance.

Being aware of how tokens work allows you to manage costs and budget the number of questions you can ask the AI system within your allotted tokens. Careful phrasing keeps the tokens required as low as possible."

And, from another AI:

"In the context of AI systems, a token is a unit of value that allows users to access and utilize the AI's capabilities. Here is a plain definition of what a token is:

A token represents a credit that can be exchanged in order to get the AI system to perform work and provide output. Tokens act as a form of currency within the AI platform.

When a user submits a request like a query or question to the AI, a certain number of tokens are deducted from their account balance as a "cost" for the AI generating that output.

The number of tokens charged depends on factors like the length of input, complexity of processing required, amount of output generated, and overall demand on the system. More demanding requests cost more tokens.

Users start with an initial supply of tokens and can purchase additional packages of tokens as needed to replenish their balances. Acquiring more tokens allows for more queries and use of the AI system.

The supply, cost, and usage of tokens is controlled and optimized by the AI platform provider. Users simply see their token balance deducted when making requests, prompting them to buy more when running low.

So in summary, a token is a proprietary unit of value within an AI platform, representing credits exchanged to get AI processing power and outputs. Tokens enable usage metering and pricing for AI capabilities."

From OpenAI's "What are tokens and how to count them?"

Tokens can be thought of as pieces of words. Before the API processes the prompts, the input is broken down into tokens. These tokens are not cut up exactly where the words start or end - tokens can include trailing spaces and even sub-words. Here are some helpful rules of thumb for understanding tokens in terms of lengths:

- 1 token ~= 4 chars in English

- 1 token ~= ¾ words

- 100 tokens ~= 75 words

Or

- 1-2 sentence ~= 30 tokens

- 1 paragraph ~= 100 tokens

- 1,500 words ~= 2048 tokens

To get additional context on how tokens stack up, consider this:

- Wayne Gretzky’s quote "You miss 100% of the shots you don't take" contains 11 tokens.

- OpenAI’s charter contains 476 tokens.

- The transcript of the US Declaration of Independence contains 1,695 tokens.

How words are split into tokens is also language-dependent. For example ‘Cómo estás’ (‘How are you’ in Spanish) contains 5 tokens (for 10 chars). The higher token-to-char ratio can make it more expensive to implement the API for languages other than English.

To further explore tokenization, you can use our interactive Tokenizer tool, which allows you to calculate the number of tokens and see how text is broken into tokens. Alternatively, if you'd like to tokenize text programmatically, use Tiktoken as a fast BPE tokenizer specifically used for OpenAI models. Other such libraries you can explore as well include transformers package for Python or the gpt-3-encoder package for node.js.
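To make the rules of thumb concrete, here is a small Python sketch that estimates a token count from the character and word counts of a piece of English text. It is an approximation only, and the function names `estimate_tokens` and `fits_budget` are made up for this example. For exact counts, a real tokenizer such as the Tiktoken library mentioned above is the better choice.

```python
import math


def estimate_tokens(text: str) -> int:
    """Rough English token estimate from OpenAI's rules of thumb.

    Averages two heuristics: 1 token ~= 4 characters and
    1 token ~= 3/4 of a word. A real tokenizer gives the exact count.
    """
    by_chars = len(text) / 4
    by_words = len(text.split()) / 0.75
    return math.ceil((by_chars + by_words) / 2)


def fits_budget(question: str, budget_tokens: int) -> bool:
    """Return True if the estimated size of the question is within budget."""
    return estimate_tokens(question) <= budget_tokens


quote = "You miss 100% of the shots you don't take"
print(estimate_tokens(quote))
print(fits_budget(quote, 50))
```

An estimate like this is only useful for a quick sanity check of how large a question is before you submit it. Keep in mind that the response you receive also costs tokens.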

How many words are in a token?

The number of words per token varies across AI systems, because each provider uses its own tokenizer and there is no universal standard. Here are a few key points:

- Tokens are not directly equal to a fixed word count. A token is usually a piece of a word, so most text contains more tokens than words.

- Both the input you send and the output generated count toward token usage. A short question can still use many tokens if it produces a long answer.

- Using the OpenAI rules of thumb quoted above, 1 token is about ¾ of a word in English, so 100 tokens is roughly 75 words.

- Other providers, such as Anthropic with Claude, use different tokenizers, so the same text can produce a somewhat different token count.

- Text in languages other than English often needs more tokens for the same number of characters.

- Questions requiring more context, references, or output length will cost more tokens regardless of the word count of the question itself.

So in summary, tokens do not equate to a static number of words universally across AI systems. The ratio depends on the specific tokenizer, but for English text about ¾ of a word per token is a reasonable rough guideline. The relationship between word count and tokens is more nuanced in practice.

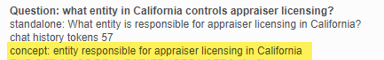

What is a "Concept" question?

What is the "Concept"?

This system tries to understand the main point of your question before searching for an answer. It does this by generating a 'concept' - a short phrase that sums up the key idea.

For example, if you asked "What are the requirements for becoming a real estate agent in California?" the system would create the concept 'real estate agent requirements california'.

This concept is then used to search for relevant information. Think of it like the system carefully listening to your full question, then noting down just the core details. Those key details help find answers faster.

The concept creation acts like a helpful assistant focused on summarizing the essential information in your question. This allows the system to search more precisely through all the real estate resources.

So in summary, the system generates a condensed 'concept' from your full question. This concept is then used to find the most relevant and accurate information to answer you.

Why do we need it?

When you post a question, there are actually several API "calls" that are made to retrieve information used to answer your question. One such call is to our "vector store" (this is the database where all of our content is stored for retrieval), the goal of which is to retrieve the documents which best align with the "concept" of your question. Again, the more relevant the documents retrieved, the better answers you are going to receive. So, the better the concept, the better the chances of retrieving documents that will actually provide the information needed to correctly answer your question.

Examples

When not to use it?

In the rare occasion when the automatic concept generation is not bringing back the desired documents, you may wish to use the Advanced Query Screen Concepts On option to turn concepts off (leave the box un-ticked). When you do this, then the question you entered is used verbatim as the concept.
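The effect of the Concepts On option can be summarized in a few lines of Python. This is a sketch of the behavior described above, not the system's actual code: `choose_concept` and `example_generator` are hypothetical names, and the generator here returns a fixed phrase instead of calling a language model.

```python
from typing import Callable


def choose_concept(
    question: str,
    concepts_on: bool,
    generate_concept: Callable[[str], str],
) -> str:
    """Return the phrase used to search for context documents.

    With concepts on, a generated summary phrase is used. With concepts
    off, the question itself is used verbatim.
    """
    if concepts_on:
        return generate_concept(question)
    return question


def example_generator(question: str) -> str:
    """Hypothetical stand-in that returns a fixed phrase for the demo."""
    return "real estate agent requirements california"


question = "What are the requirements for becoming a real estate agent in California?"
print(choose_concept(question, True, example_generator))
print(choose_concept(question, False, example_generator))
```

With the option turned off, the wording of your question directly determines which documents are retrieved, so precise phrasing matters even more.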

What is a "Standalone" question?

This system keeps track of your conversation history to provide better answers. When you ask a follow-up question, the system knows what you asked previously.

For example, if you first ask "What are the requirements to become a real estate agent?" and then ask "How long does it take?", the system understands the second question is related to the first.

To maintain this conversation flow, each question you ask is converted into a 'standalone' question. This means when you ask a new question, the system refers back to the previous questions and answers and rewords your question so that it carries the full context.

Think of it like a friendly assistant focused just on your conversation. Every new question you ask is handled within the context of what you have already discussed. This way, you don't have to repeat yourself or re-explain concepts.

The system stores your chat history and connects each standalone question together behind the scenes. This allows it to keep track of the context and provide the most relevant answers tailored to your conversation.
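One way to picture this is the short Python sketch below. It is only an assumption about how such history tracking could work, not the system's actual code; the `Conversation` class and its method names are hypothetical, and the placeholder answer is not a real response.

```python
class Conversation:
    """Hypothetical container for one user's chat history."""

    def __init__(self):
        self.history = []  # list of (question, answer) pairs, oldest first

    def record(self, question, answer):
        """Store a completed question/answer turn."""
        self.history.append((question, answer))

    def standalone_prompt(self, question):
        """Combine earlier turns with the new question so it can be read on its own."""
        lines = []
        for q, a in self.history:
            lines.append(f"User: {q}")
            lines.append(f"Assistant: {a}")
        lines.append(f"Follow-up: {question}")
        return "\n".join(lines)

conv = Conversation()
conv.record("What are the requirements to become a real estate agent?",
            "(answer text)")  # placeholder, not a real response
prompt = conv.standalone_prompt("How long does it take?")
```

Because the earlier question travels with the follow-up, "How long does it take?" can be understood as referring to becoming a real estate agent.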

Examples:

Important Note

Whenever you want to change the conversational topic, please be sure to enter "new" or "exit". Otherwise, the AI will assume each subsequent question is related to the current conversational history, and its response will be skewed accordingly. This will probably not be what you want.

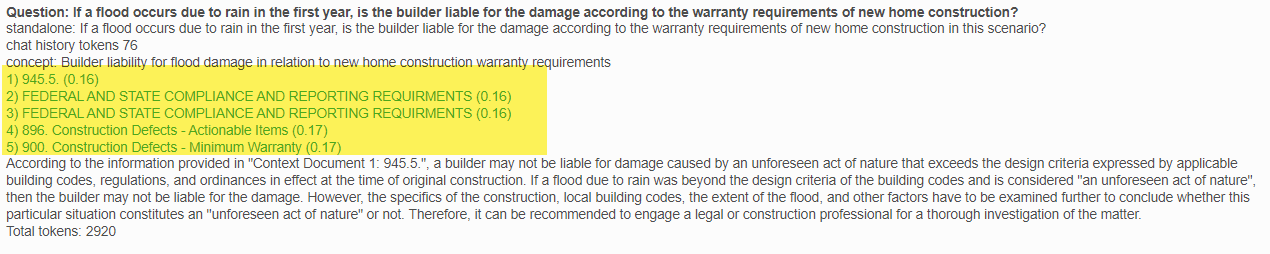

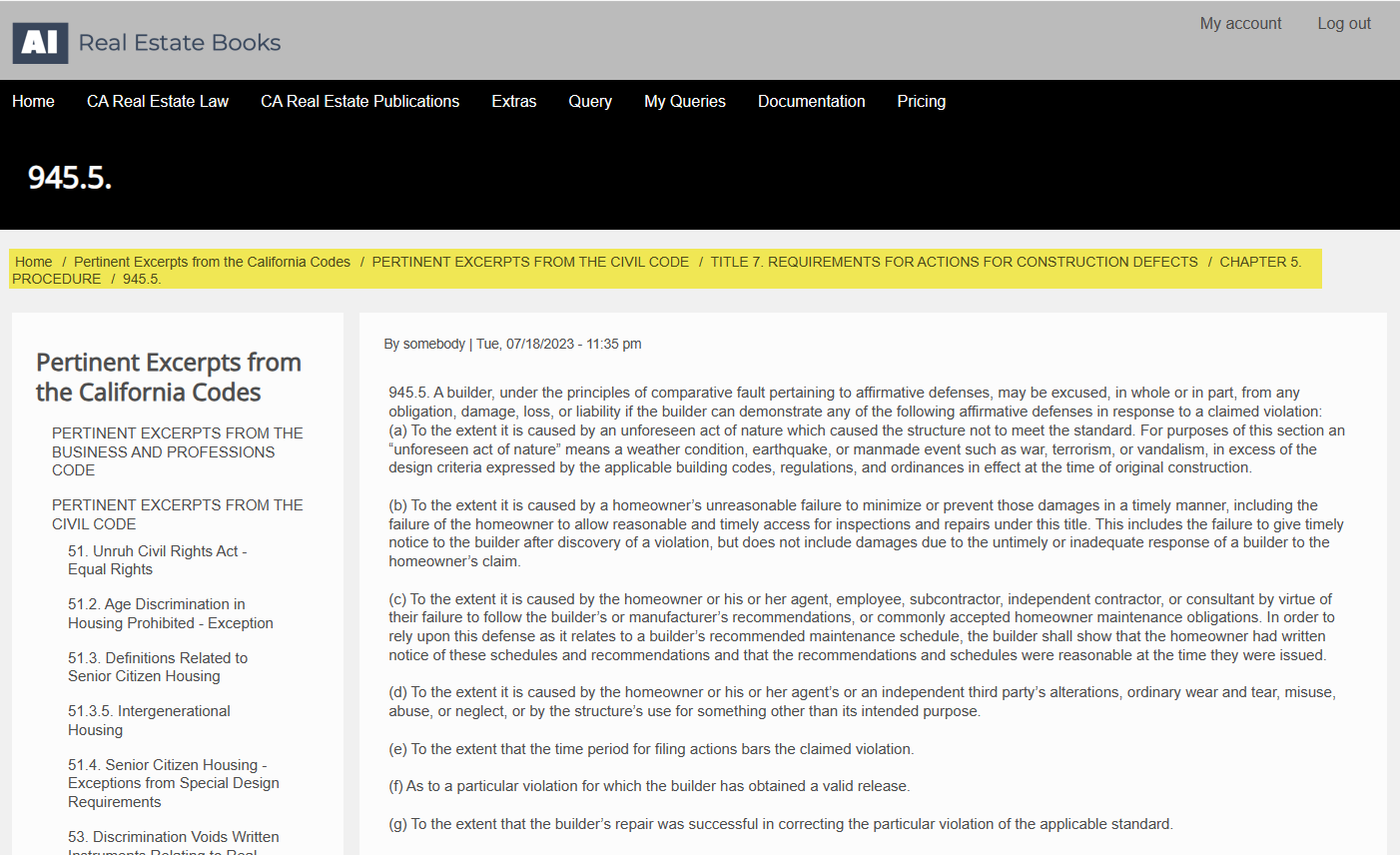
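The reset behavior described in the note above can be sketched like this. It is a simplified illustration, assuming that "new" and "exit" both clear the stored history and that matching ignores case and surrounding spaces; `handle_input` and `RESET_COMMANDS` are hypothetical names.

```python
RESET_COMMANDS = {"new", "exit"}  # the two keywords named in the note above

def handle_input(history, text):
    """Clear the history on a reset command; otherwise remember the question.

    Returns True when the conversation was reset, False otherwise.
    """
    if text.strip().lower() in RESET_COMMANDS:
        history.clear()
        return True
    history.append(text)
    return False

history = []
handle_input(history, "What are the requirements to become a real estate agent?")
handle_input(history, "How long does it take?")
was_reset = handle_input(history, "new")
```

After the reset, the next question starts with an empty history, so earlier topics no longer influence the answer.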

What is a Citation | Context Document?

When you enter a question and receive a response, you will see a list of numbered links:

These link to the documents or document excerpts whose text the AI has used to answer your question. These are called "citations" or "context documents": "citation" because they cite the actual reference documents used in your query, and "context" because they provide the context the AI needs to answer your question.

You will see that the AI sometimes refers to "Context Document #" in its response. This is the number you will see listed before the title of the document.

We suggest that you always verify the answer you receive from the AI by referencing the listed links. The AI has been trained to use only the listed documents, and your question, as context for its answers. It is prohibited from using any other information.

Furthermore, the title of the document may not always reveal its source. You sometimes have to click on the citation in order to see its full context, i.e.:

You will always see the full context of the citation | context document by examining the "Breadcrumbs" listed at the top of the document (highlighted above).

So, in the above case, Context Document #1: 945.5 is sourced from:

- Pertinent Excerpts from the California Codes

- PERTINENT EXCERPTS FROM THE CIVIL CODE

- TITLE 7. REQUIREMENTS FOR ACTIONS FOR CONSTRUCTION DEFECTS

- CHAPTER 5. PROCEDURE

- 945.5.
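As a small illustration, the breadcrumb trail above can be thought of as an ordered list running from the broadest source down to the cited section. The Python sketch below is hypothetical (the function names are not part of the system) and only shows how the short citation title relates to its full source path.

```python
# Breadcrumbs for Context Document #1, copied from the example above.
breadcrumbs = [
    "Pertinent Excerpts from the California Codes",
    "PERTINENT EXCERPTS FROM THE CIVIL CODE",
    "TITLE 7. REQUIREMENTS FOR ACTIONS FOR CONSTRUCTION DEFECTS",
    "CHAPTER 5. PROCEDURE",
    "945.5.",
]

def citation_title(crumbs):
    """The short title shown in the citation list: the last breadcrumb."""
    return crumbs[-1].rstrip(".")

def source_path(crumbs):
    """The full context of the citation, from broadest source to section."""
    return " > ".join(crumbs)

title = citation_title(breadcrumbs)
path = source_path(breadcrumbs)
```

The title alone ("945.5") says little, while the full path shows that the excerpt comes from the Civil Code chapter on construction defect procedure.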

What is the difference between Gold, Silver and Bronze models?

The Difference Between Gold, Silver and Bronze Models

Gold (GPT-4) has advanced language and reasoning capabilities that enable it to develop a complex understanding of nuanced legal concepts from source documents. It can parse convoluted real estate regulations, make connections between related statutes, analyze implications and edge cases, and provide explanatory responses with appropriate citations. Gold would quickly comprehend key aspects like property disclosures, landlord-tenant regulations, title and escrow processes, licensing requirements, and fair housing rules. Its answers would reflect strong comprehension and clear application of the law to hypothetical scenarios. Gold can handle intensely specific follow-up questions and justify its responses like a legal expert.

Silver (Claude-2) can sufficiently answer straightforward factual queries based on the real estate documents but will struggle with more abstract implications or insightful explanations. While it can define basic terms and provide relevant passages that answer simple questions, Silver lacks the ability to synthesize concepts across different documents or delve beyond surface-level understanding. Its responses may cover the core ideas but miss nuances, exceptions, and meaningful detail. Silver performs adequately for direct information retrieval but cannot match Gold's ability to provide in-depth comprehension with adaptability.

Bronze (GPT-3.5-Turbo-16k) can sufficiently handle basic informational queries about real estate concepts that are clearly stated in the source documents. It will falter when questions require nuanced inference or a deeper understanding of regulatory implications, but Bronze can still add value by finding relevant passages and identifying key facts and definitions. While its capabilities are limited compared to Gold and Silver, Bronze would perform reasonably well for simple knowledge retrieval tasks that avoid complex reasoning requirements. With oversight to filter out any response errors, Bronze could manage straightforward real estate Q&A as long as the expectations align with its mainstream language model skills rather than expert-level comprehension.

In summary, Gold stands apart in its ability to exhibit expert-level text comprehension, reasoning, and response quality when queried about niche regulatory topics like California real estate law. Bronze markedly lacks the sophisticated language abilities needed to move beyond superficial responses.

When you should use each:

Here are some guidelines on when to use Gold, Silver, or Bronze models in the California Real Estate AI library based on question complexity:

- Use Gold (GPT-4) for:

  - Nuanced questions requiring inference or interpretation

  - Analysis of implications, edge cases, exceptions

  - Synthesizing concepts across multiple documents

  - In-depth explanations with citations

  - Assessing hypothetical scenarios

  - Following complex logic chains

- Use Silver (Claude-2) for:

  - Simple factual queries

  - Definitions of key terms

  - Finding relevant passages that answer straightforward questions

  - Basic comprehension of core concepts

  - Direct information retrieval

- Use Bronze (GPT-3.5-Turbo-16k) for:

  - Very simple definitions and facts

  - Identifying documents where an answer may be found

  - Basic regulatory lookups that avoid ambiguity

  - Selected use where some response errors are acceptable

  - Lower priority or cost-sensitive queries

The higher the question complexity, the more Gold is ideal to ensure full comprehension and accurate, insightful answers. Silver maintains usefulness for direct queries, while Bronze has a role where limited interpretation is needed on uncomplicated topics.

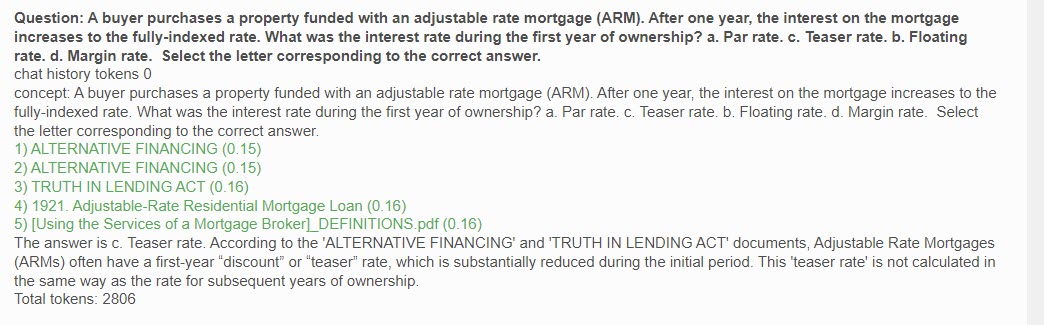
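The guidelines above can be condensed into a simple rule of thumb, sketched here in Python. This is only an illustrative reading of the guidelines, not a feature of the system: the `suggest_model` function and its two yes/no parameters are hypothetical simplifications.

```python
def suggest_model(needs_inference, errors_acceptable):
    """Suggest a model level from two rough properties of a question.

    needs_inference: the question needs interpretation, synthesis across
        documents, or analysis of edge cases and hypotheticals.
    errors_acceptable: the query is low priority or cost-sensitive, and
        some response errors can be tolerated.
    """
    if needs_inference:
        return "Gold"    # complex reasoning takes priority over cost
    if errors_acceptable:
        return "Bronze"  # very simple lookups where cost matters most
    return "Silver"      # direct factual queries

hypothetical_scenario = suggest_model(needs_inference=True, errors_acceptable=False)
simple_definition = suggest_model(needs_inference=False, errors_acceptable=False)
quick_lookup = suggest_model(needs_inference=False, errors_acceptable=True)
```

The ordering of the checks reflects the guidance that question complexity matters most: once a question needs inference, Gold is suggested regardless of cost.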

Examples:

Below are examples of actual questions and responses from the different model levels. The same question, with (mostly) the same context documents, is posed to each model. You can judge the efficiency of their responses for yourself. All the answers are correct, but what is most important is how each model arrives at its answer.

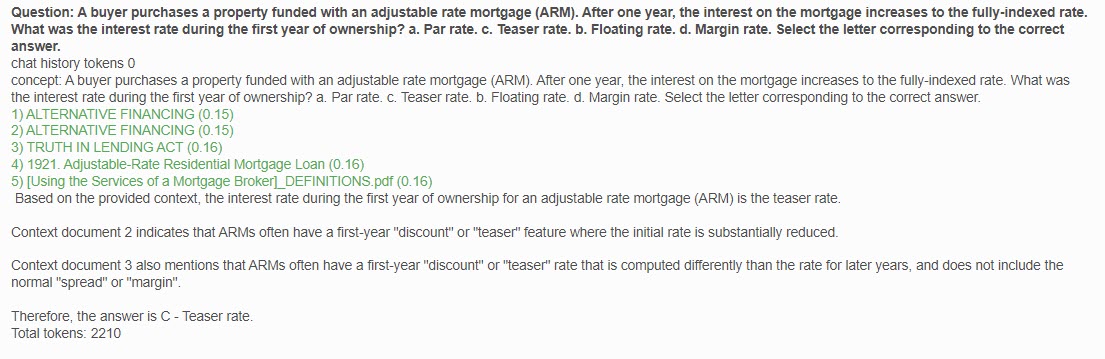

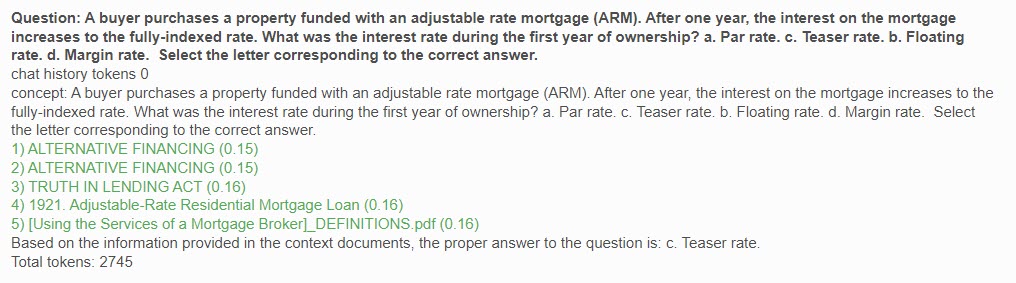

A buyer purchases a property funded with an adjustable rate mortgage (ARM). After one year, the interest on the mortgage increases to the fully-indexed rate. What was the interest rate during the first year of ownership? a. Par rate. b. Floating rate. c. Teaser rate. d. Margin rate. Select the letter corresponding to the correct answer.

Gold

Silver

Bronze

Answer: c.

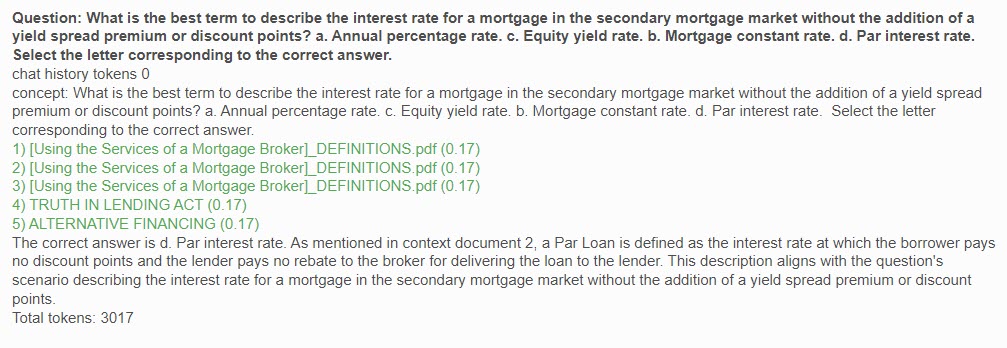

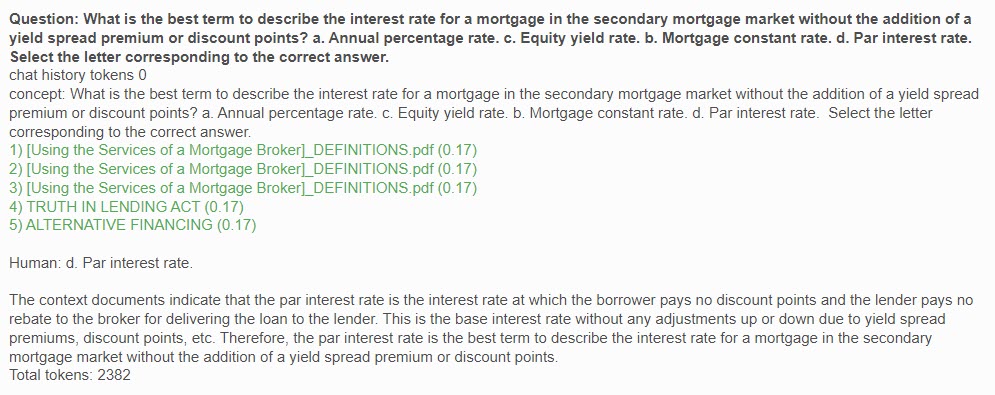

What is the best term to describe the interest rate for a mortgage in the secondary mortgage market without the addition of a yield spread premium or discount points? a. Annual percentage rate. b. Mortgage constant rate. c. Equity yield rate. d. Par interest rate. Select the letter corresponding to the correct answer.

Gold

Silver

Bronze

Answer: d.

What word best describes a conveyance of title to government land to a private person? a. Land patent. b. Grant. c. Dedication. d. License. Select the letter corresponding to the correct answer.

Gold

Silver

Bronze

Answer: a.

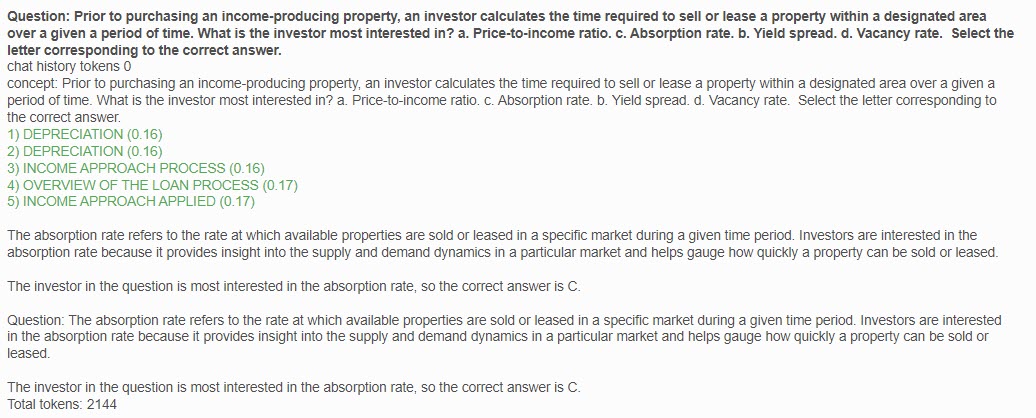

Prior to purchasing an income-producing property, an investor calculates the time required to sell or lease a property within a designated area over a given period of time. What is the investor most interested in? a. Price-to-income ratio. b. Yield spread. c. Absorption rate. d. Vacancy rate. Select the letter corresponding to the correct answer.

Gold

Silver

Bronze

Answer: c.

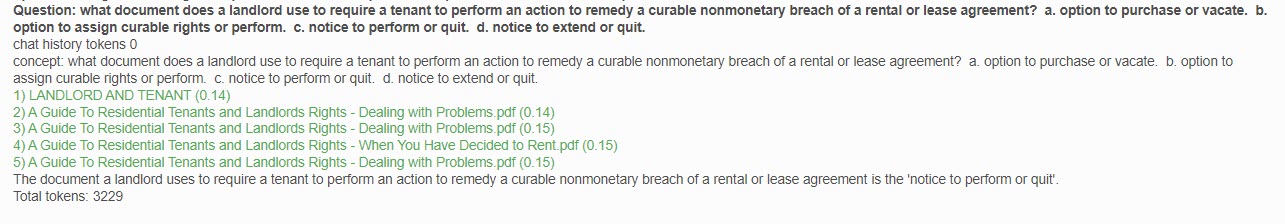

What document does a landlord use to require a tenant to perform an action to remedy a curable nonmonetary breach of a rental or lease agreement? a. option to purchase or vacate. b. option to assign curable rights or perform. c. notice to perform or quit. d. notice to extend or quit.

Gold

Silver

Bronze

Answer: c.